Update: The SEO for iWeb Walkthrough Video Tutorial has been released. It walks you through the entire process of optimizing your iWeb website for search engines, explaining everything step by step.

We always get questions from iWeb users asking how they can improve their website rankings. We also get comments saying that RAGE Sitemap Automator doesn’t find all of the web pages on an iWeb site. There are a few reasons for this, which we will discuss here.

Update: As of version 2.0, Sitemap Automator properly scans iWeb-based websites, making it one of the only tools able to do so. However, the following tips are still essential for success with search engines. See our step-by-step video guide on creating an XML Sitemap file.

iWeb websites are not built to be search engine friendly. In fact, almost all of the iWeb-based websites we see are seriously hurting their search engine rankings. If you follow these few simple instructions, you should see significant improvements.

1) iWeb Page Titles

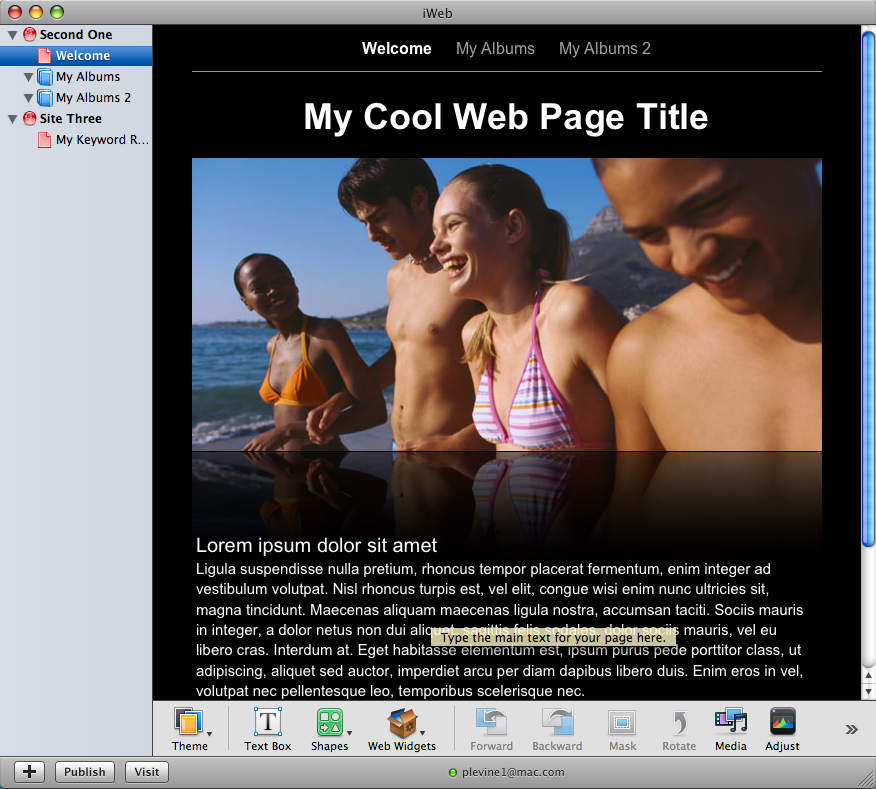

As of iWeb 08 (and now iWeb 09), most built-in templates have a large header caption at the top of the page. Your website’s title tag will actually reflect what you enter here. Many users simply leave the default caption in place, missing out on the most important on-page optimization available for search engines.

The trick is to give your page a title that both includes the keywords you want to rank for in search engines and accurately describes your website content. Your web page title appears at the very top of your web browser window and in search engine results pages. Search engines use your title tag to get an idea of what they will find on your page.
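If you want to double-check the title your published page actually carries, the Python sketch below pulls the text out of the `<title>` element and lists any target keywords it does not contain. It is a rough illustration, not part of any RAGE tool: the `check_title` helper, the sample page and the keyword are all hypothetical, and it only does a simple case-insensitive substring match.

```python
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collects the text inside the first <title> element of a page."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = None  # stays None if the page has no <title>

    def handle_starttag(self, tag, attrs):
        if tag == "title" and self.title is None:
            self._in_title = True
            self.title = ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def check_title(html, keywords):
    """Hypothetical helper: return (title, keywords missing from the title)."""
    parser = TitleParser()
    parser.feed(html)
    title = (parser.title or "").strip()
    # Simple case-insensitive substring match; no stemming or word boundaries.
    missing = [kw for kw in keywords if kw.lower() not in title.lower()]
    return title, missing


# A page that kept its default caption (hypothetical sample).
default_page = "<html><head><title>Welcome</title></head><body></body></html>"
print(check_title(default_page, ["handmade pottery"]))
# ('Welcome', ['handmade pottery'])
```

A title like "Welcome" tells a search engine nothing about the page, which is exactly what the check flags.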

Update: With iWeb SEO Tool you can now edit your web page titles, meta tags and alternative image text after you publish your site, so you no longer have to worry about how iWeb generates your title tag.

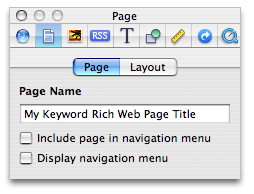

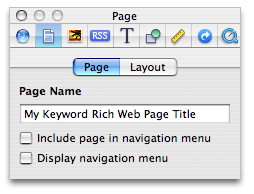

For templates without these header captions, or if you remove the caption, iWeb will use your page’s file name as its title. The screenshot above shows the iWeb Inspector window, which lets you edit the file name of the selected web page. Give it a good title using the advice provided above.

2) Navigation bars

One of the biggest problems with iWeb is the way it creates your navigation bars. Instead of using standard HTML links, which search engines can follow to find every page on your website, it uses JavaScript, which makes it extremely hard for search engines to crawl and index your website properly.
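To see why this matters, here is a simplified Python sketch of what a basic crawler does first: collect the `href` targets of ordinary `<a>` links. The JavaScript-built navigation bar in the sample is a hypothetical stand-in, not the exact code iWeb generates, and `buildNavBar` is an invented function name. A crawler that does not run JavaScript finds no links at all in the script-built version.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Records the href targets of <a> tags, roughly what a basic crawler follows."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Skip anchors without an href and javascript: pseudo-links.
        if href and not href.lower().startswith("javascript:"):
            self.links.append(href)


def crawlable_links(html):
    collector = LinkCollector()
    collector.feed(html)
    return collector.links


# Hypothetical samples: a script-built menu versus plain HTML links.
js_nav = '<div id="nav"></div><script>buildNavBar(["About.html", "Contact.html"]);</script>'
html_nav = '<p><a href="About.html">About</a> <a href="Contact.html">Contact</a></p>'

print(crawlable_links(js_nav))    # []
print(crawlable_links(html_nav))  # ['About.html', 'Contact.html']
```

The page names inside the script are just text to this parser; only real `<a href>` links show up as something to follow.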

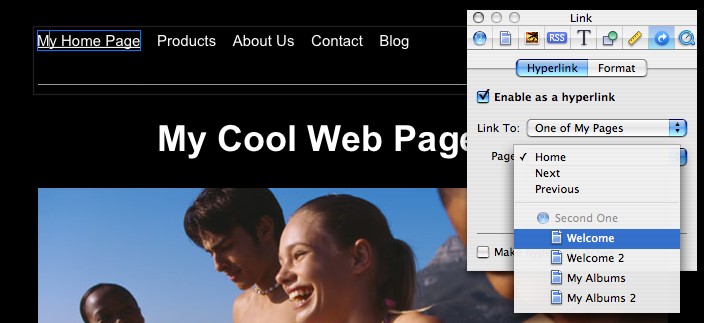

Fortunately, there is a way to work around this problem. Select your main page (or the first page that contains your navigation bar) and open the Inspector window. Click the Page Inspector tab (the second tab) and deselect the ‘Display Navigation Bar’ option, as shown in the screenshot above.

Now you’re going to create your own navigation bar with proper links to each of your pages. First, make room at the top of the page: choose Edit > Select All, then hold the Shift key as you drag all of your content down, which helps ensure you don’t accidentally move it off center. Create a new Text Box, place it at the top of your page, and add a caption for each of your pages, separated by tabs or spaces so that together they look like a proper navigation bar.

Then select each caption and go to the ‘Link Inspector’ tab in the Inspector window. Select ‘Enable as a hyperlink’ and choose ‘One of My Pages’ from the ‘Link To’ drop-down menu. Lastly, select the page you want to link to from the ‘Page’ drop-down menu.

Although you should do this on every page for best results, doing it on your main page alone is extremely helpful for search engines.

3) The Right Content

One of the biggest issues I see with iWeb-created websites is users choosing non-standard web fonts. Some background: a number of fonts are considered safe for use on the web because they are almost always installed on a user’s computer, no matter what operating system or web browser they use. If you use a font that is not installed on a visitor’s computer, your text will not display properly for them. iWeb works around this issue by turning your text into pictures whenever you use a non-standard font, which is why your web page always looks the same no matter where you view it. Unfortunately, search engines cannot ‘read’ text that has been turned into pictures, and this will severely hurt your potential search engine rankings.
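The difference is easy to see from a crawler’s point of view. This Python sketch gathers the text a simple indexer could read from a page, meaning ordinary text nodes plus any `alt` text on images, while skipping scripts and styles. The sample markup and the `heading.png` file name are hypothetical; when a heading is rendered as a picture, the only readable words left are whatever alternative text the image carries.

```python
from html.parser import HTMLParser


class IndexableText(HTMLParser):
    """Gathers text a simple indexer could read: text nodes plus <img> alt text."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip = 0  # nesting depth inside <script>/<style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1
        elif tag == "img":
            alt = dict(attrs).get("alt")
            if alt:
                self.chunks.append(alt)

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip and data.strip():
            self.chunks.append(data.strip())


def indexable_text(html):
    parser = IndexableText()
    parser.feed(html)
    return " ".join(parser.chunks)


# Hypothetical samples: the same heading as real text, as a picture, and as a picture with alt text.
as_text = '<p style="font-family: Georgia">Handmade pottery in Portland</p>'
as_image = '<img src="heading.png">'
as_image_alt = '<img src="heading.png" alt="Handmade pottery in Portland" />'

print(repr(indexable_text(as_text)))   # 'Handmade pottery in Portland'
print(repr(indexable_text(as_image)))  # ''
```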

You must stick to the standard web fonts, which are listed below. This ensures that your website has the best possible chance of ranking high for the keywords you are targeting.

Web-safe fonts include:

- Arial

- Courier New

- Georgia

- Times New Roman

- Verdana

- Trebuchet MS

- Helvetica

*Note: Some of the above fonts may not be installed on every computer, but if they cannot be found they will be replaced with a very similar-looking alternative. That is why they have all been included in the list above.

You want to make sure that your website content contains the keywords you want to rank high for in search engines. It’s not good enough to “be in search engines”; you want to appear when a potential customer types in one of your keywords. Search engines will not know what your web page is about unless you include the proper keywords in your web page content.
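As a quick sanity check, you can count how often each target keyword appears in a page’s text. This Python sketch does a case-insensitive, whole-phrase count with regular expressions; the `keyword_counts` helper, sample text and keywords are hypothetical, and a raw count is only a rough signal, not a measure of how a search engine will rank the page.

```python
import re


def keyword_counts(text, keywords):
    """Count case-insensitive, whole-phrase occurrences of each keyword in text."""
    counts = {}
    for kw in keywords:
        # \b anchors keep "pottery" from matching inside a longer word.
        pattern = r"\b" + re.escape(kw) + r"\b"
        counts[kw] = len(re.findall(pattern, text, flags=re.IGNORECASE))
    return counts


page_text = ("Handmade pottery from our Portland studio. "
             "Every piece of pottery is thrown by hand.")
print(keyword_counts(page_text, ["pottery", "ceramics"]))
# {'pottery': 2, 'ceramics': 0}
```

A zero next to a keyword you care about is a sign that the page content never tells search engines it is relevant to that search.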

4) iWeb Landing Pages

Lastly, something I see very often is users making a so-called “landing page” their home page. This is the type of page that may simply show your company logo with a “Click here to enter” link. Anything that requires visitors to take one more step before they can see your website is never a good thing.

This applies to search engines as well. Your home page is considered your most important page by default, so make sure you are taking full advantage of it. Link to other important pages directly from your home page and make sure it includes keyword-rich content.
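One way to picture the cost of a landing page is click depth: how many links a visitor (or a crawler) has to follow from the home page to reach each page. This Python sketch computes it with a breadth-first search over a hypothetical site map written as a dictionary of links; the page names are made up for illustration.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search: number of clicks from `home` to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical sites: one with a "click here to enter" landing page, one without.
with_landing = {"index.html": ["Home.html"],
                "Home.html": ["Products.html", "About.html"]}
direct = {"index.html": ["Products.html", "About.html"]}

print(click_depths(with_landing, "index.html"))
# {'index.html': 0, 'Home.html': 1, 'Products.html': 2, 'About.html': 2}
print(click_depths(direct, "index.html"))
# {'index.html': 0, 'Products.html': 1, 'About.html': 1}
```

With the landing page, every real page sits one click further from the home page than it needs to.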

In the next post I will go over some iWeb misconceptions as well as some search engine misconceptions that can typically affect iWeb users.

Remember, search engines will not simply pick your site out of the billions out there and rank it at the top of their search index unless you give them good reason to. Ranking high in search engines for the keywords your customers are searching for can be extremely profitable, and it will take some time to achieve. Don’t expect immediate results, and keep learning about the strategies you can use to get high rankings.

Download our Free Mac SEO Guide to learn how you can get higher rankings with your iWeb websites.